OHMC2020

Move over Cybertruck, in 2020 we're building self-driving DingoCars!

The Open Hardware MiniConf (OHMC) is a 1-day event that runs as a specialist stream within the linux.conf.au conference.

Interest in Open Hardware is high among FOSS enthusiasts, but there is also a barrier to entry: the perceived difficulty and danger of dealing with hot soldering irons, unknown components and unfamiliar naming schemes. We use the assembly project as a stepping stone to help ease software developers into Open Hardware. Plenty of instructors will be on hand to help with soldering and to answer any questions about hardware assembly.

NO PRIOR HARDWARE EXPERIENCE REQUIRED! That is, after all, what this session is all about! To get the most out of it, some basic knowledge of Linux commands (ssh, running commands, navigating the filesystem) will be helpful.

Participants learn hardware skills by assembling their own electronic project, which involves soldering and understanding how circuits work. They also hear talks about various projects and techniques related to the morning project and to open hardware in general.

Before the day

Registration and cost

STOP! Please read the information below before wildly clicking through to the application form!

To attend you must first register for the main conference, which provides the venue. The Open Hardware Miniconf is part of LCA as a specialist stream for conference attendees: it's not a stand-alone event. See linux.conf.au/attend/tickets for more information.

Places for the assembly session are strictly limited, so registration is required and there is a fee to cover the cost of materials. Even if you ticked the "Open Hardware MiniConf" box on the LCA registration form, you still need to apply for the miniconf separately if you wish to participate in the assembly session.

The registration process for the Open Hardware MiniConf is different this year from previous years.

Instead of a direct registration form, we’re using a 2-step process. This will help us avoid the registration rush that usually has the event sold out within a few hours.

This will also allow us to do a better job of balancing the allocation of different types of tickets, including normal tickets, supporter tickets, and subsidised tickets, to encourage greater diversity. The result should be fairer than the simple race to the rego form of previous years.

So take a deep breath, and relax. It’s not a race this year!

Step 1: Submit the application form using the link below. We’ll collect applications and then assign spots according to the different types of tickets.

Step 2: We’ll follow up this coming weekend to let you know if you have a ticket assigned. If you do, you’ll receive details of how to submit your payment and receive your ticket.

Please note that the application form includes a question about whether you identify as belonging to an under-represented group. The reason for this is to allow us to prioritise tickets for people who would otherwise not be well represented at the event, to improve the overall diversity.

Of course, this is a very difficult thing to define, and you may be wondering if this applies to you. We don’t want to be in a position of making a judgement call, so we’re leaving it up to your discretion.

The cost of the assembly session is AU$140, which includes all the parts required for you to build your own Perception Module and fit it onto a converted R/C car chassis to make a self-driving model car. Some subsidised places are also available for those who couldn’t otherwise afford to attend. The actual value of the parts provided is approximately AU$260, but thanks to a generous benefactor the cost has been kept low in order to allow more people to participate.

All registered LCA attendees are welcome to come along and watch, or attend the talks, without additional registration.

Where is it?

Tuesday, January 14, 2020, Room 8.

What to bring?

A laptop that has an SSH client.

Want to present?

We have slots for 30-minute talks, as well as 5-minute lightning talks.

Submit your talk proposal on the LCA open hardware miniconf page.

Got something to share about your experiences with Open Hardware or Maker life? New to open hardware and want to tell us your achievements? An old hand and want to tell us your gritty stories of failures, successes and lessons learned? Not sure if your idea is suitable? Submit it anyway and we can talk with you about whether it'll fit in.

Schedule for the day

The miniconf is split into morning and afternoon sessions.

| Time | Session |
|---|---|
| 10:40 - 12:20 | Assembly Workshop (registration required to participate; spectators also welcome) |
| 12:20 - 1:30 | Lunch |
| 1:30 - 1:55 | How the Perception Module hardware works: John Spencer |
| 1:55 - 2:20 | Running TensorFlow on the Perception Module: Andy Gelme |
| 2:20 - 2:45 | Donkey Cars at Yarra Valley Tech School: Matt Pattison |
| 2:45 - 3:10 | ESP32 memory management: Marc Merlin |
| 3:10 - 3:50 | Afternoon Tea |
| 3:50 - 4:15 | IoT energy monitor using a Linux-capable host CPU: Tishampati Dhar |
| 4:15 - 4:40 | WEMOS Minis are such a timesaver - or am I doing this wrong?: Sven Dowedeit |
| 4:40 - 4:55 | Creating a Kubernetes cluster using LattePandas: Brian May |
| 4:55 - 5:30 | Lightning Talks (5-minute blocks) |

How the Perception Module hardware works

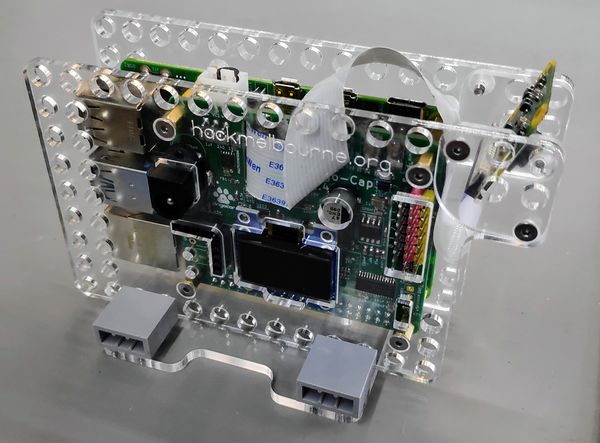

Presenter: John Spencer

Learn how the Dingo Cap works with a Raspberry Pi to provide power management and I/O capabilities for machine-learning projects.

Perception Module software

Presenter: Andy Gelme

Run TensorFlow on a Perception Module to control a self-driving model car.

Donkey Cars at Yarra Valley Tech School

Presenter: Matt Pattison

Yarra Valley Tech School uses Donkey Cars to teach students about software and electronics.

ESP32 memory management

Presenter: Marc Merlin

Microcontrollers such as the ESP32 present new challenges to developers who are accustomed to gigabytes of RAM and to memory management handled by the OS.

IoT energy monitor using Linux capable host CPU

Presenter: Tishampati Dhar

This talk discusses the design of a DIN-rail energy monitor with a Linux host processor. IoT systems are becoming part of everyday life, and some are built with little regard for security or privacy. As an electronics engineer, I set out to design an IoT energy monitoring system that would be open source, with openly reviewed firmware that lets users audit its security and privacy features and, if they wish, send data to their own IoT storage platforms hosted within their private network. The talk also includes a deep dive into the Linux kernel module for SPI drivers.

The initial iterations of the design used the ESP8266 and then the ESP32 as the host processor, and the firmware evolved from Arduino Wiring/C++ to MicroPython. My recent efforts have focused on transitioning to a fully Linux-capable host processor, while delegating the real-time processing and energy computations to ASICs and FPGAs with ADC extensions. The energy-monitor-specific code running on the Linux CPU is intended to be written in Python for ease of porting and iteration.

WEMOS Minis are such a timesaver - or am I doing this wrong?

Presenter: Sven Dowedeit

I was roped into helping FollyGames, an immersive theatre and performance group, build distributed actuators to add to their experiences. It started as a rescue for a performance at the Museum of Brisbane using a Raspberry Pi, audio and NFC, and over time grew to include NeoPixels, UHF RFID, buttons, motors, servos, and more.

In an attempt to head off the joys of last-minute ideas, I've been leaning heavily on WEMOS Minis and the shields you can buy on AliExpress.

Assembly Project: Perception Module and DingoCar

Each year we help attendees build a project specially developed for the Open Hardware Miniconf.

The project for 2020 is the Perception Module, a flexible TensorFlow platform that you can build into your own machine-learning projects.

It includes a Raspberry Pi 3B+, a camera, onboard power management, support for both NiMH and LiPo batteries, servo outputs, a 128x64 pixel OLED display, NeoPixel output, and connections for a sonar sensor.

The Perception Module is a direct descendant of the project from OHMC2019, with the brains of the Donkey Car encapsulated in a self-contained module that even has Lego-compatible holes to help you build it into your own projects.

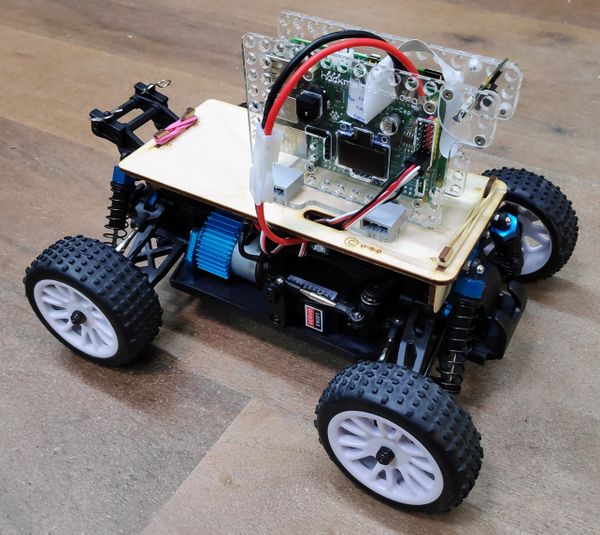

At the OHMC we will assemble our own Perception Modules and then fit them onto converted R/C cars to build the delightful DingoCar, a machine-learning self-driving car.

DingoCar is a fun project but it's only one example of how you can use the Perception Module. After the event you can take your DingoCar and Perception Module home with you, and either continue to use them together or build other projects of your own around the Perception Module.

Some parts come pre-built, such as the custom board that hooks up to the Raspberry Pi and provides connections for the camera and servo outputs for the engine and the steering. We'll attach the remaining components to the board using soldering, wiring, magic and luck.

The hardware kit includes:

- RC car chassis

- Raspberry Pi 3B+

- Raspberry Pi Camera

- Custom chassis parts for mounting

- Voltage regulator, PWM output controller, and other required parts

We'll then connect wirelessly and train the car by driving it around a track. Once the computer has processed this training data into a model, we will unleash our DingoCars to drive themselves!

Yes, you will need your own laptop to participate in the assembly project, to handle the training and control of your DingoCar. Setting some items up in advance will give you more time in the session for the hardware and ease the load on the conference Wi-Fi.

More information and instructions are available at:

Beyond LCA2020

We want all participants to be able to do more with their car and their Perception Module. Whether it's training the car to handle different situations (chase the dog), adding extra electronics (play a sound if it gets stuck, or flash lights when it's turning), or using the Perception Module in other projects, all things are possible!

We have a section for OHMC alumni to take you further.

Credits

OHMC is mostly run by Andy Gelme and Jon Oxer, with able assistance from their team of robots. In 2020 they are also joined by the following human assistants: John Spencer, Andrew Nielson, Bob Powers and Nicola Nye. We also rely on a big team of helpers on the day, including Cary Dreelan, Matt Pattison and Mark Wolfe, who provide hands-on guidance to attendees, teach assembly and soldering techniques, and answer questions.