OHMC2020 Software instructions

Overview

Overall, the steps involved are:

- Connect to your car and start up the driving management software

- Drive the car on a track (through the web interface, or some sort of external controller) and record the session.

- Download the data onto your computer

- Process the data into a self-driving model (either on Google Colab or on your local environment)

- Put the model back on the car.

- Start the car in self-driving mode.

From a software perspective, the DingoCar (Raspberry Pi) is self-contained: driving, data acquisition (for training) and ultimately self-driving are all performed with on-board software. The provided micro-SD card already has all the required software pre-installed, as well as a pre-trained AI / Machine Learning model for the OHMC2020 track. The DingoCar software includes a web server that provides a web interface that works in both desktop and mobile web browsers (which is adequate for driving).

You do need your own laptop to connect to the car (and possibly to train the Neural Network, using the data acquired on the DingoCar). The Machine Learning model is trained on Google Colab or on your laptop, because the Raspberry Pi is far too slow for training.

Once you have built your car and tried the prepared software and Machine Learning model, you are strongly encouraged to acquire your own training data, train the Machine Learning model and try self-driving the car using your model.

The OHMC2020 project is effectively a general-purpose image detection / recognition module, which can easily be detached from the RC car base. We hope that you try training the car on situations other than just two-line tracks, e.g. train by driving down the sidewalk. We especially hope that you experiment with applications that don't involve the RC car, e.g. make a pet door that has been trained to only open for your pet!

Software environment: Laptop / Desktop

For the easiest and fastest Machine Learning model training, you can use software on Google's Colab to process your car data in the cloud (free GPUs for everyone!). This means you only need to have on your computer:

- ssh: to connect to your car (Windows users: it's now available as an optional Microsoft update, or you can install PuTTY)

- scp: to copy files to and from your car (Windows users: PuTTY comes with pscp, which is equivalent)

- a Google Drive account

If you'd like a local build environment on your computer, check out our quickstart guide, or use the detailed and thorough instructions on the DonkeyCar website.

Initial car setup

Your car is already preconfigured to access the LCA2020 Wi-Fi access point. It will display its IP address (among other data) on the OLED screen. You can use this IP address to know where to connect to (via SSH).

First: update the password away from the default!

Log in with ssh pi@<IP_ADDRESS> using the default password raspberry. Change the password as soon as you are logged in, using the passwd command.

Update your car with the latest software updates from your delightful OHMC organiser team.

$ cd ~/play/ai/dingocar/utility

$ git pull

$ sudo ./upgrade_to_v0.sh

Driving your car manually

Caution: Put your car "on blocks" (wheels off the ground) the first time you try driving it

- ssh pi@<IP_ADDRESS>

- cd ohmc_car

- python manage.py drive

loading config file: /home/pi/play/roba_car/config.py

config loaded

PiCamera loaded.. .warming camera

Starting Donkey Server...

You can now go to http://xxx.xxx.xxx.xxx:8887 to drive your car.

With a desktop web browser, the user interface provides a virtual joystick (right-hand frame) that you can use to drive the car by altering the steering and throttle values.

In the mobile web browser, the user interface allows you to drive by tilting the phone left-right for steering and forwards-backwards for throttle. For safety, you must press the [Start Vehicle] / [Stop Vehicle] toggle button to enable control.

Further DonkeyCar docs on driving

Acquiring training data

We have preloaded a model on your car so you can cut straight to letting the car drive itself if you'd like.

Once you are driving your car confidently around a track, it is time to acquire training data for the Neural Network. DingoCar operates at 10 frames per second, capturing a 160x120 image, along with steering angle and throttle value. This is all stored in the $HOME/ohmc_car/data/tub_xx_yy_mm_dd directory.

Before training, it may be helpful to clean out previous data in the $HOME/ohmc_car/data/ directory.

Perform the same commands as for manual driving:

- ssh pi@<IP_ADDRESS>

- cd ohmc_car

- python manage.py drive

Then via the web browser press the [Start Recording] button, drive the car around a track, then press the [Stop Recording] button.

It is recommended that you collect between 5K and 20K frames. At 10 frames per second, that is between 500 and 2,000 seconds of driving (roughly 8-33 minutes). Practically speaking, you can get away with 5-10 minutes. Make sure that you drive clockwise and anti-clockwise! Just like a human: the more practice in different conditions you can give your car in training, the better it will drive for itself.

When you have finished acquiring data, transfer it from the DingoCar to your laptop / desktop for training the Neural Network.
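The relationship between frame count and driving time is simple arithmetic. Here is a small sketch using the 10 frames per second capture rate stated earlier in this section; the function names are made up for illustration.

```python
FRAME_RATE_HZ = 10  # capture rate stated for the DingoCar in this section


def driving_time_minutes(frames, frame_rate_hz=FRAME_RATE_HZ):
    """Minutes of recorded driving needed to collect `frames` frames."""
    return frames / frame_rate_hz / 60


def frames_collected(minutes, frame_rate_hz=FRAME_RATE_HZ):
    """Frames collected after `minutes` of recorded driving."""
    return int(minutes * 60 * frame_rate_hz)
```

For example, `frames_collected(10)` gives 6000, so ten minutes of recording lands just above the lower end of the recommended range.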

- export DATE=yy_mm_dd (set this both on the car and on your computer, since the commands below use it in both places)

- First ssh into your car and create a tarball of your data for easier transfer

- tar czvpf tub_$DATE.tgz ohmc_car/data/tub_$DATE/

- Then, on your computer, copy the data from your car to your computer.

- If you're using Google Colab, you can just copy the data to any directory you'd like to use.

- scp pi@<car_ip>:tub_$DATE.tgz .

Turn your data into an AI model

Once training data has been copied to your laptop / desktop, you can begin training the Neural Network. You can train your data on Google Colab, or on your local environment.

Google Colab instructions

This is convenient because it's fast, doesn't require a big setup time on your local computer, and gives you more time playing with your car and less time dealing with installation and configuration. Also, if you don't have a GPU, it's significantly faster to generate the model using Google's computing power than on your own machine.

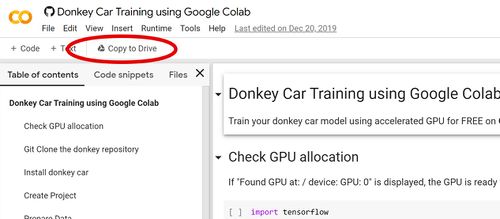

- Log into your Google account (or sign up on the spot)

- Go to the Google Colab site

- Click the 'Copy to Drive' button to make a copy for your own use.

The left-hand panel has information; the right-hand panel is where the operations happen. It's a sequence of steps that you can run and modify. The sequence of instructions walks you through uploading your data from your car to your Google Drive account, then running it through the Colab machine learning model generator. Every time the web session ends, your session on Google Colab also ends and you need to restart operations from the start.

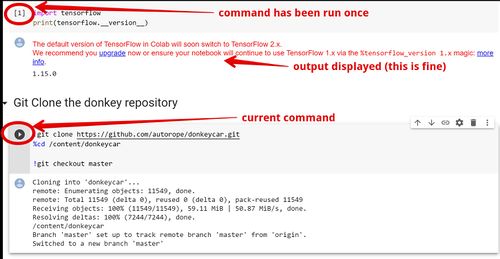

Click on each instruction to execute it.

Note: Once you have reinstalled tensorflow you will need to restart the runtime as instructed.

Once a command has been run, it shows the output.

Remember the data file you copied off your car? You need to upload it to your Google Drive. The Google Colab script expects the tub data to be in a directory on Google Drive called mycar.

Once you've finished the script and copied the .h5 file back into your Google Drive, you can get it back down to your laptop and from there, onto your car.

- scp mypilot.h5 pi@<IP_ADDRESS>:ohmc_car/models/model_$DATE.hdf5

Further DonkeyCar info on training

Training on your computer

If you'd like a local build environment on your computer, check out our quickstart guide, or use the detailed and thorough instructions on the DonkeyCar website.

Further DonkeyCar info on training

Letting your car drive itself!

Once your trained model has been copied back onto the DingoCar, your car can be self-driven as follows:

- python manage.py drive --model ~/ohmc_car/models/model_$DATE.hdf5

Here's one we prepared earlier! If you want to test out your car with a model we made earlier, you can use the model we have pre-loaded onto your car.

- python manage.py drive --model ~/ohmc_car/models/model_2020-12-12_lca2020.h5

This works similarly to manual driving mode, with the addition of a trained model. You can choose between three modes:

- User: Manual control of both steering and throttle

- Local Angle: Automatically control the steering angle

- Local Pilot: Automatically control both the steering angle and throttle amount

The web browser provides a drop-down menu to select between these options.
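The three modes differ only in which values come from you and which come from the model. The sketch below illustrates that selection logic; the mode strings and function name are labels chosen for this example and are not necessarily what the DingoCar software uses internally.

```python
def select_controls(mode, user_angle, user_throttle, pilot_angle, pilot_throttle):
    """Return the (steering angle, throttle) pair that is sent to the car.

    Mode labels are hypothetical stand-ins for the three menu options:
    "user" (User), "local_angle" (Local Angle), "local" (Local Pilot).
    """
    if mode == "user":
        # Manual control of both steering and throttle.
        return user_angle, user_throttle
    if mode == "local_angle":
        # Model steers, human controls the throttle.
        return pilot_angle, user_throttle
    if mode == "local":
        # Model controls both steering and throttle.
        return pilot_angle, pilot_throttle
    raise ValueError(f"unknown mode: {mode!r}")
```

In "Local Angle" mode, the model's throttle prediction is ignored entirely, which is why it is a gentle way to start: you stay in charge of the speed.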

It is recommended to just start with "Local Angle" and control the throttle manually with the "i" key (faster) and "k" key (slower).

Troubleshooting

See the OHMC2020 Troubleshooting guide.

What next?

Configuring wifi networks

To connect to your car after you leave LCA, it needs to be able to join other networks. It's a good idea to configure it to talk to your phone's hotspot, so that you can access your car anywhere. Run raspi-config, which brings up a UI from which you can add more networks.

If you need to configure a new network but can't get into your car to do so, you can

- plug in an external monitor (there's an HDMI port) and USB keyboard, or

- configure your Pi "headless" (without a monitor/keyboard): take out the SD card, plug it into a computer and create a wpa_supplicant.conf file in the SD card's root directory with the SSID and password of the network. Warning: this will override any existing networks configured on your RPi. See [Headless instructions].
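The headless option amounts to writing a small text file. The sketch below generates contents in the same shape as the wpa_supplicant.conf shown under "Step 2: Configure wifi" in the background section. The function name is made up for illustration, the values are placeholders, and it does not escape quote characters in the SSID or password.

```python
def wpa_supplicant_conf(ssid, psk):
    """Build wpa_supplicant.conf text for a single WPA-PSK network.

    Mirrors the fields shown in the background section of this document;
    other settings your network may need are not covered here.
    """
    return (
        "ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev\n"
        "\n"
        "network={\n"
        f'    ssid="{ssid}"\n'
        f'    psk="{psk}"\n'
        "    key_mgmt=WPA-PSK\n"
        "}\n"
    )
```

Writing the returned string to a file named wpa_supplicant.conf on the SD card is then a one-line `open(...).write(...)` call from whichever computer has the card mounted.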

Configuring timezone

You may also want to update your timezone when you get home. This can also be done in raspi-config.

Steering and throttle calibration

To save time at the workshop, you won't need to calibrate your car's steering and/or throttle. However, you may get better results and can perform calibration when you have time.

DonkeyCar instructions on calibration

See what your car sees

AI is not magic. You'd think it's looking at the lines on the ground, until you check out its image of what it's really paying attention to. Once you've generated a model, you can get your DingoCar to generate a movie showing how it has used your training data to come up with its model.

cd ~/ohmc_car

donkey makemovie --tub data/{tub_name} --model models/{model name}.h5 --type linear --salient

This puts tub_movie.mp4 into the current directory which you can play. It shows two velocity lines: one blue and one green. The blue line is the predictions, the green line is the actual data. The lines show throttle through line length, and steering is the angle of the line. The highlights on the movie show the inputs the car is paying attention to for it to make its decisions.
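To make the line encoding concrete, the sketch below converts a steering and throttle pair into the endpoints of such a line. It is purely illustrative and is not how `donkey makemovie` draws its overlay: the scaling constants, the assumption that steering and throttle lie in [-1, 1] with positive steering meaning right, and the function name are all made up for this example.

```python
import math


def velocity_line(origin, steering, throttle, max_length=50, max_angle_deg=45):
    """Return (start, end) pixel coordinates for a steering/throttle line.

    Assumptions (hypothetical, for illustration only): steering and throttle
    are in [-1, 1], positive steering leans the line to the right, and
    full throttle draws a line max_length pixels long.
    """
    angle = math.radians(steering * max_angle_deg)
    length = throttle * max_length
    x0, y0 = origin
    x1 = x0 + length * math.sin(angle)
    y1 = y0 - length * math.cos(angle)  # image y coordinates grow downward
    return (x0, y0), (round(x1), round(y1))
```

With this encoding, a frame where the blue and green lines overlap closely is one where the model's prediction matches what you actually did.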

Do more with your DingoCar

A DingoCar is for life, not just for the OHMC at LCA. There's so much more you can do with it. Check out our Beyond DingoCar section.

Dive into docs

There's extensive DonkeyCar documentation if you're looking for more detailed installation or configuration instructions.

Background information

How your Raspberry Pi image is built

Your DingoCar (Raspberry Pi) comes with everything pre-installed. This section is for reference only.

Extensive DonkeyCar documentation

Step 1: Copy Raspberry Pi microSD card image and shrink with GParted to 6 GB

- See https://elinux.org/RPi_Resize_Flash_Partitions

- See https://softwarebakery.com/shrinking-images-on-linux

Step 2: Configure wifi (without needing external kb and monitor)

- Raspberry Pi: Headless Raspberry Pi setup with wifi

- See https://styxit.com/2017/03/14/headless-raspberry-setup.html

cd /Volumes/boot

touch ssh

vi wpa_supplicant.conf

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

network={

ssid="YOUR_SSID"

psk="YOUR_WIFI_PASSWORD"

key_mgmt=WPA-PSK

}

ssh pi@raspberrypi.local # password: raspberry

Step 3: Upgrade Raspberry Pi firmware, operating system and packages

raspi-config # Keyboard, locale, timezone, minimal GPU memory, enable I2C

sysctl -w net.ipv6.conf.all.disable_ipv6=1 # You will need to reconnect via ssh afterwards

sysctl -w net.ipv6.conf.default.disable_ipv6=1 # IPv6 disabled to ensure apt works properly (and is off everywhere, for clarity)

apt-get update && apt-get upgrade

rpi-update

*** Updating firmware

*** depmod 4.19.93-v7l+

*** If no errors appeared, your firmware was successfully updated to b2b5f9eeb552788317ff3c6a0005c88ae88b8924

sudo reboot

uname -a

Linux 4.19.93-v7l+ #1286 SMP Mon Jan 6 13:24:00 GMT 2020 armv7l GNU/Linux

apt-get install rcs

Step 4: Install DonkeyCar dependencies

apt-get install build-essential python3 python3-dev python3-virtualenv python3-numpy python3-picamera python3-pandas python3-rpi.gpio i2c-tools avahi-utils joystick libopenjp2-7-dev libtiff5-dev gfortran libatlas-base-dev libopenblas-dev libhdf5-serial-dev git

Step 5: Install optional OpenCV dependencies

apt-get install libilmbase-dev libopenexr-dev libgstreamer1.0-dev libjasper-dev libwebp-dev libatlas-base-dev libavcodec-dev libavformat-dev libswscale-dev libqtgui4 libqt4-test

Step 6: Install OpenSSL

mkdir /tmp/openssl

cd /tmp/openssl

wget https://www.openssl.org/source/openssl-1.0.2q.tar.gz

tar -xvpf openssl-1.0.2q.tar.gz

cd /tmp/openssl/openssl-1.0.2q

./config

make -j4

sudo make install

Step 7: Install Python 3.6.5 (takes a while) for TensorFlow 1.14

apt-get install libbz2-dev # for pandas !

cd ~/downloads

wget https://www.python.org/ftp/python/3.6.5/Python-3.6.5.tar.xz

tar -xpf Python-3.6.5.tar.xz

cd Python-3.6.5

vi Modules/Setup.dist # Uncomment the following lines ...

SSL=/usr/local/ssl
_ssl _ssl.c \
-DUSE_SSL -I$(SSL)/include -I$(SSL)/include/openssl \
-L$(SSL)/lib -lssl -lcrypto

./configure --enable-optimizations

make -j4

sudo make altinstall

reboot

python --version

sudo rm -rf Python-3.6.5.tar.xz Python-3.6.5

Step 8: Setup Virtual Env

python3.6 -m venv env

echo "source env/bin/activate" >> ~/.bashrc

source ~/.bashrc

Step 9: Install Donkeycar Python Code

cd ~/play/ai

git clone https://github.com/autorope/donkeycar

cd donkeycar

git checkout master

pip install cython numpy

pip install -e .[pi]

cd ~/downloads

wget https://www.piwheels.org/simple/tensorflow/tensorflow-1.14.0-cp36-none-linux_armv7l.whl

pip install tensorflow-1.14.0-cp36-none-linux_armv7l.whl # Requires Python 3.6

python

>>> import tensorflow as tf

>>> print(tf.__version__) # 1.14.0

cd ~/play/ai

git clone git@github.com:tall-josh/dingocar.git

cd dingocar

git checkout master

Step 10: Install optional OpenCV

- Build Python OpenCV from source code (if desperate) ...

- See https://www.pyimagesearch.com/2018/05/28/ubuntu-18-04-how-to-install-opencv

apt install python3-opencv # For Python3

cd ~/env/lib/python3.6/site-packages

ln -s /usr/lib/python3/dist-packages/cv2.cpython-37m-arm-linux-gnueabihf.so cv2.so

python

>>> import cv2

>>> print(cv2.__version__) # 3.2.0

Step 11: Configure PS/3 Controller

apt-get install libusb-dev

cd ~/play

mkdir sixpair

cd sixpair

wget http://www.pabr.org/sixlinux/sixpair.c

gcc -o sixpair sixpair.c -lusb

Connect PS/3 Controller via USB cable

sudo ./sixpair

Unplug PS/3 Controller

apt-get install git libbluetooth-dev checkinstall libusb-dev

apt-get install joystick pkg-config

cd ~/play

git clone https://github.com/RetroPie/sixad.git

cd sixad

make

sudo mkdir -p /var/lib/sixad/profiles

sudo checkinstall

sudo sixad --start

cd ~/play/ai/donkeycar/donkeycar/parts

vi controller.py # modify PS/3 map

cd ~/play/ai/roba_car

vi myconfig.py

CONTROLLER_TYPE='ps3'

Step 12: Miscellaneous

- Power-down button

vi /boot/config.txt

dtoverlay=gpio-shutdown,gpio_pin=13